Every business owner we speak with is asking about AI. And fair enough—the potential is real.

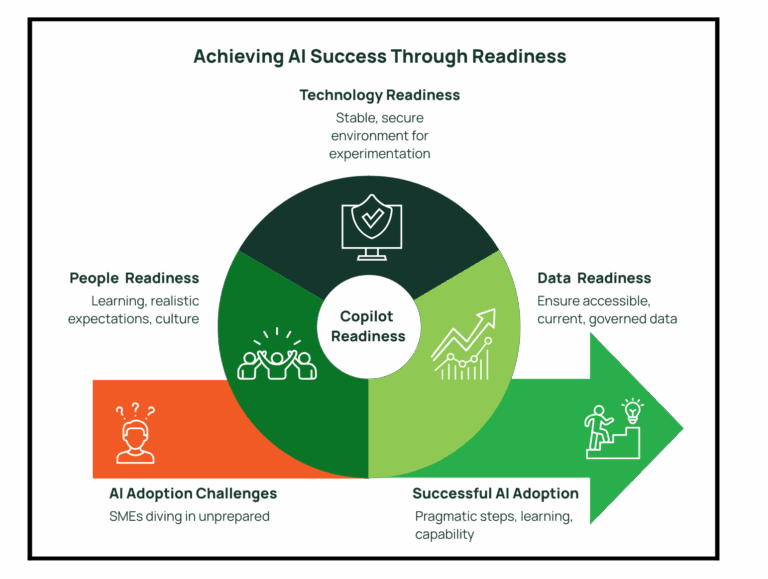

But here’s what we’re seeing: most SMEs are diving into AI tools without checking whether they’re actually ready to get value from them.

The good news? You don’t need a comprehensive AI strategy or a transformation roadmap. Most organisations are finding their way with AI one step at a time—trying Copilot here, experimenting with automation there, learning as they go.

But there are three fundamental questions that determine whether those experiments will succeed or flounder. Get these Copilot readiness fundamentals right, and you’re set up to learn and adapt. Get them wrong, and you’ll waste time and money on AI tools that don’t deliver.

Question 1: Is Your Organisational Data AI-Ready?

This is the foundation everything else is built on. AI is only as good as the data it can access and understand. Yet this is where we see the biggest gap between expectation and reality.

Ask yourself three things:

Is your data accessible? If your critical business information lives in local drives, personal folders, or filing cabinets, AI can’t help you. For AI to be useful, your data needs to be in connected systems—SharePoint, OneDrive, your line-of-business applications. Not everything needs to be perfect, but the information your team actually uses daily should be digitally accessible. (Learn how SharePoint can transform your information management.)

Is your data current and clean? Most organisations have years of digital clutter. Old versions of documents. Outdated information no one’s touched since 2015. Duplicate files scattered across different locations. When AI tools search through that mess, they can’t tell what’s current and what’s obsolete. You don’t need to clean everything overnight, but you do need to know where your source of truth sits for important information.
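If you want a rough sense of how much clutter you’re carrying, a short script can give you a first look. The sketch below is one possible approach, not a definitive audit: it walks a local or synced folder (it does not talk to SharePoint itself), flags files that haven’t been modified within a threshold, and groups files with identical contents. The three-year threshold and the function names are our own assumptions for illustration.

```python
import hashlib
import time
from collections import defaultdict
from pathlib import Path
from typing import Optional

STALE_AFTER_DAYS = 3 * 365  # assumed threshold; choose what suits your business


def file_digest(path: Path) -> str:
    """Return a SHA-256 digest of the file's contents."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def audit_folder(root: Path, now: Optional[float] = None):
    """Return (stale_files, duplicate_groups) for every file under root."""
    now = time.time() if now is None else now
    cutoff = now - STALE_AFTER_DAYS * 86400
    stale = []
    by_digest = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        if path.stat().st_mtime < cutoff:
            stale.append(path)  # not modified since the cutoff
        by_digest[file_digest(path)].append(path)
    # Only keep groups where two or more files share identical contents.
    duplicates = [group for group in by_digest.values() if len(group) > 1]
    return stale, duplicates
```

Calling `audit_folder(Path("some/synced/folder"))` returns two lists you can review with the people who own that information. Treat the output as a prompt for a conversation about where the source of truth sits, not as a list of files to delete.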

Is your data properly governed? Here’s the kicker: Copilot works within the same permissions your people have. If your SharePoint permissions are a mess, whether that means anyone can access anything or critical information is locked down so tightly that no one can find it, those same problems will plague AI tools like Copilot. Good AI outcomes require good information governance.

Good information governance means having clear ownership of information, consistent permission structures, and documented policies about who should access what. For example, budget documents should be open to your finance team but restricted from general staff, and those same boundaries will determine what Copilot can surface for different users.
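To make the permissions point concrete, here is a toy model of permission-trimmed results. It is a simplified sketch, not how SharePoint or Copilot is implemented: the `Document` class, the group names, and the sample documents are all hypothetical. The idea is simply that a user can only be shown a document if they belong to a group that is allowed to read it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    allowed_groups: frozenset  # hypothetical: groups permitted to read this document


def visible_documents(user_groups, documents):
    """Return titles the user may see: documents sharing at least one group."""
    groups = set(user_groups)
    return [doc.title for doc in documents if groups & doc.allowed_groups]


# Hypothetical sample data. The payroll export is deliberately over-shared.
DOCUMENTS = [
    Document("FY budget forecast", frozenset({"finance"})),
    Document("Staff handbook", frozenset({"finance", "general-staff"})),
    Document("Payroll export", frozenset({"finance", "general-staff"})),
]

finance_view = visible_documents({"finance"}, DOCUMENTS)
staff_view = visible_documents({"general-staff"}, DOCUMENTS)
```

In this example, `staff_view` includes "Payroll export" because that document was shared too widely. Nothing about the AI tool caused that; the permission was already wrong, and an assistant that searches on a user’s behalf just makes the mistake easier to stumble across.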

The reality check: If someone new joined your team today, could they find the information they need within your digital environment? If the answer is “not really,” then AI won’t fare much better.

Once your data foundation is solid, the next question is whether your technology environment can support safe experimentation.

Question 2: Is Your Technology Foundation Ready for Safe Experimentation?

You don’t need cutting-edge infrastructure to benefit from AI. But you do need an environment that’s stable and secure enough to turn on AI features without creating new risks. Copilot readiness starts with your technology foundation.

Is your Microsoft 365 environment in good shape? For most SMEs, AI adoption starts with the Microsoft ecosystem—Copilot in Word, Teams, Outlook. But if your M365 environment has security gaps, inconsistent configurations, or systems that don’t talk to each other properly, you’re building on shaky ground. Basic hygiene matters: multi-factor authentication, proper licensing, security policies that actually work.

(For detailed guidance: Discover which M365 security features you should be using to protect your Copilot deployment)

Can you pilot safely? The beauty of modern AI tools is that you can test small before committing big. But that requires an environment where you can trial features with a subset of users, measure what happens, and roll back if needed. If your systems are so fragile that any change creates chaos, you can’t learn effectively.
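One way to picture “trial with a subset, roll back if needed” is a percentage-based pilot. The sketch below is an illustration under our own assumptions (the function names and the `salt` value are made up for this example): it places each user in a stable bucket from 0 to 99, so raising the percentage only ever adds people, and setting it to zero removes everyone. In practice you might simply pick a named group of volunteers instead.

```python
import hashlib


def in_pilot(user_id: str, rollout_percent: int, salt: str = "copilot-pilot") -> bool:
    """Deterministically decide whether a user falls inside the pilot percentage."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket, 0..99
    return bucket < rollout_percent


def pilot_group(user_ids, rollout_percent: int):
    """Return the users included in the pilot, preserving input order."""
    return [user for user in user_ids if in_pilot(user, rollout_percent)]
```

Because the bucket depends only on the user ID and the salt, the same people stay in the pilot from one run to the next, which makes it easier to compare their experience over time. Rolling back is a matter of calling `pilot_group(users, 0)`.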

Do you have the right guardrails? AI amplifies everything—including security risks. Before you enable AI tools like Copilot across your organisation, you need basic data protection and compliance frameworks in place. Not perfect, just appropriate for your business. What information can AI tools access? What can they do with it? Who’s responsible for monitoring usage?

The goal isn’t perfection. It’s having a solid enough foundation that you can experiment, learn, and scale what works without creating new problems.

With your data and technology foundation in place, success ultimately depends on whether your people can actually use these tools effectively.

Question 3: Can Your People Actually Adopt and Iterate with AI?

Technology is only half the equation. The other half is whether your team can realistically learn, use, and benefit from AI tools.

Do people have time and permission to experiment? Here’s what doesn’t work: rolling out Copilot or other AI tools and expecting people to figure them out on top of their already-full workload. Successful AI adoption requires some dedicated time for learning, testing, and adjusting workflows. Not months—but enough space to properly onboard.

Are expectations realistic? AI isn’t magic. It won’t solve problems that are actually people or process issues. It won’t replace thinking. And it definitely won’t deliver value overnight. Teams need to understand what AI can genuinely help with (automating repetitive tasks, surfacing information faster, drafting routine content) versus what it can’t (strategic thinking, relationship building, complex decision-making).

Is there a culture of learning? The organisations getting value from AI are the ones that treat it as an ongoing learning process, not a one-and-done implementation. That means being comfortable with trial and error. Sharing what works and what doesn’t. Adjusting your approach based on real experience. If your culture punishes mistakes or resists change, AI adoption will be an uphill battle.

Can you build capability over time? You don’t need everyone to become an AI expert. But you do need some internal capability to assess tools, guide usage, and help people improve their skills over time. Whether that’s upskilling existing staff or bringing in external support, there needs to be a path for building competence.

And remember: not everyone will embrace AI at the same pace. Some team members will dive in immediately while others need more time and support. Both approaches are valid.

What This Means for You

If you’re reading these three questions and thinking “we’ve got some work to do”—that’s completely normal. Very few SMEs tick all these boxes today.

The key is knowing where you stand so you can make informed decisions about where to invest your time and budget. Maybe you need to clean up your data environment before deploying AI tools. Maybe your technology foundation is solid but your team needs more support. Maybe you’re actually in better shape than you thought and just need confidence to start experimenting.

AI readiness isn’t about having everything perfect. It’s about having the fundamentals right so you can explore opportunities as they emerge, learn from what works, and build capability over time.

That’s how successful AI adoption actually happens in the real world—one pragmatic step at a time.